The 8-Month Window: Why Regenerative Leaders Must Act on AI Now

AI capability is doubling every 4 months. If you’re not on this wave now, you won’t catch the next one.

Here’s the uncomfortable truth regenerative leaders need to hear:

The organizations doing the most important work on the planet are regenerating ecosystems, rebuilding local economies, preserving indigenous knowledge… and they’re about to be outpaced by organizations that care far less but move far faster.

Not because their work doesn’t matter. Because AI capability is growing exponentially, and most impact organizations are sitting on the sidelines.

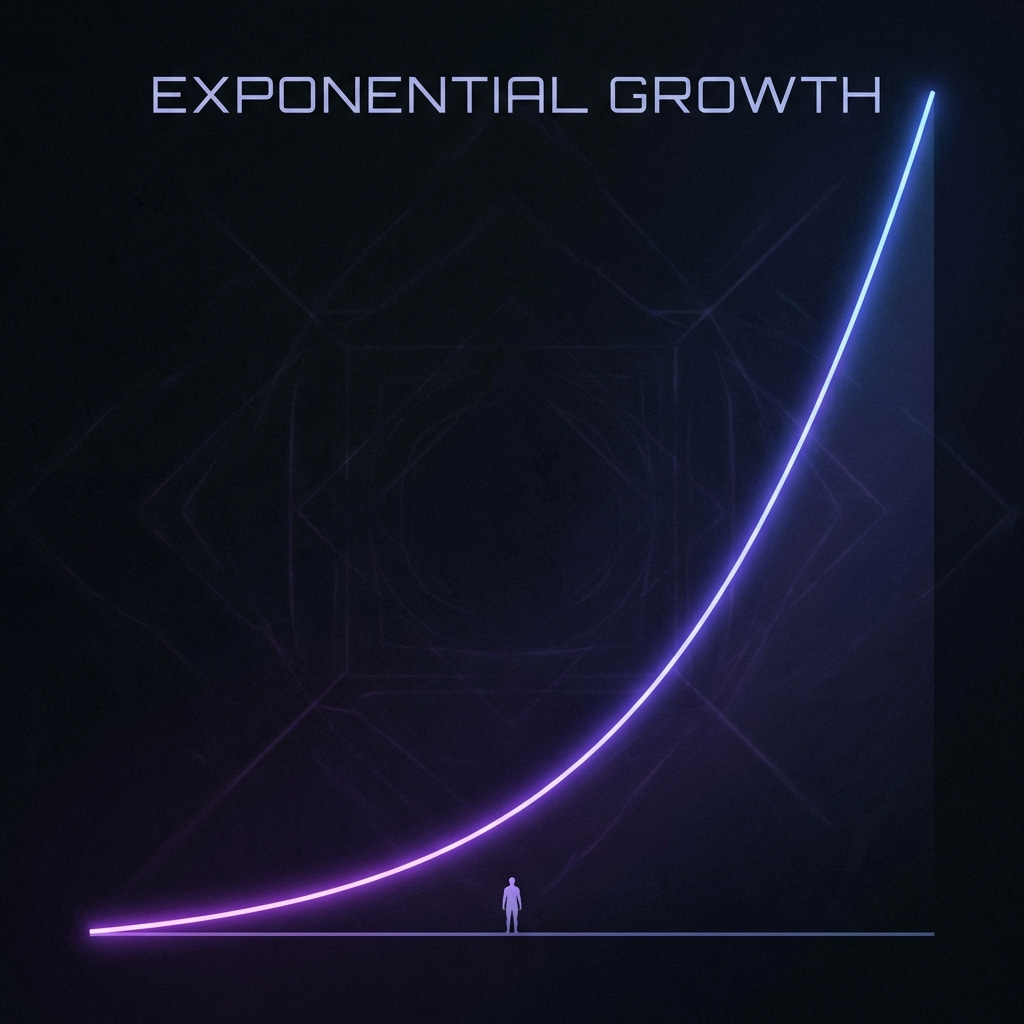

The exponential you’re underestimating

In March 2025, researchers at METR published findings that stopped me cold: the length of tasks AI agents can complete autonomously has been doubling every 7 months for the past six years. Recently, that rate accelerated: in 2024-2025, it’s been doubling every 4 months. If the acceleration continues, the doubling time could shrink to 3 months, and then to less than that.

In 2019, the best AI models could handle tasks that took humans a few seconds. By 2023, that expanded to tasks taking 8-15 minutes. Today’s frontier models—like Claude with extended thinking—can reliably complete tasks that take skilled humans nearly an hour.

If this trend continues (and six years of data suggest it will), by 2030, AI systems will be tackling projects that currently take humans a month.
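To make the arithmetic behind that projection concrete, here is a minimal sketch. It assumes a starting task horizon of one hour (the "nearly an hour" figure above), treats a month of human work as roughly 167 working hours (my assumption, not a number taken from the study), and uses a hypothetical helper, `months_until`, that simply counts doublings at a constant rate.

```python
import math

def months_until(target_hours, start_hours=1.0, doubling_months=7.0):
    """Months for the task horizon to grow from start_hours to target_hours,
    assuming it doubles every `doubling_months` months (constant-rate assumption)."""
    doublings = math.log2(target_hours / start_hours)
    return doublings * doubling_months

WORK_MONTH_HOURS = 167  # assumption: roughly 40 hours a week for a month

for rate in (7, 4):
    months = months_until(WORK_MONTH_HOURS, doubling_months=rate)
    print(f"Doubling every {rate} months: ~{months / 12:.1f} years to month-long tasks")
```

Under these assumptions, the 7-month rate takes a little over four years from a 2025 starting point, which is where the 2030 estimate comes from. The 4-month rate gets there in roughly two and a half years.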

The window is 8-16 months. After that, the organizations that embedded AI into their operations will be operating in a fundamentally different reality than those still “considering” it.
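As a rough illustration of what that window means in practice, the sketch below computes how many times longer the tasks AI can handle would become over 8 and 16 months. The function name `growth_factor` is hypothetical, and the constant doubling rate is an assumption carried over from the trend described above.

```python
def growth_factor(months, doubling_months):
    """Multiple by which the task horizon grows over `months`,
    assuming one doubling every `doubling_months` months."""
    return 2 ** (months / doubling_months)

for window in (8, 16):
    fast = growth_factor(window, 4)  # recent 4-month doubling rate
    slow = growth_factor(window, 7)  # longer-run 7-month doubling rate
    print(f"{window} months: {fast:.0f}x at the fast rate, {slow:.1f}x at the slow rate")
```

At the 4-month rate, that is a 4x jump after 8 months and a 16x jump after 16 months. Even at the slower 7-month rate, the horizon more than doubles within the window.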

MIT’s 2025 study found that 95% of companies investing in AI see zero measurable return. Only 5% move from pilot to production. Those 5% aren’t the ones with the biggest budgets—they’re the ones who started with organizational readiness instead of tool adoption.

The question isn’t whether AI will transform your organization. It’s whether you’ll be leading that transformation or scrambling to catch up.

🤖 Agents driving this exponential

Notice that all of these agents run inside a terminal, not inside a graphical wrapper like the ChatGPT app. That is a major hurdle for most people, but one that regenerative leaders need to get comfortable with!

Claude Code Opus 4.5: An autonomous AI software engineer capable of planning and executing complex engineering tasks with extended thinking patterns.

Codex 5.2 High: OpenAI’s coding agent, run at its high reasoning setting, which chains model reasoning steps to complete goals autonomously and ships with an open-source command-line tool.

Gemini 3 Pro: Google’s latest multimodal agent platform designed for building powerful autonomous systems with a deep context window.

Factory Droids: Specialized autonomous droids for software development that handle end-to-end coding tickets, reviews, and detailed testing.

Z.AI GLM 4.7: An advanced Chinese model series with ‘Vibe Coding’ capabilities for generating cleaner, modern web applications and robust reasoning.

The parallel nobody seems to be talking about

I’ve been watching the AI conversation split into two camps.

On one side: corporations spending millions on big 5 consulting firms, running endless pilots, producing beautiful slide decks that never become deployed systems.

On the other side: organizations led by people who’ve done the inner work. Founders who understand that transformation—whether through therapy, meditation, plant medicine, or deep organizational redesign—follows the same pattern. You can’t force it. You can’t buy it. You have to become ready for it.

The organizations that successfully adopt AI share something with individuals who’ve had genuine transformative experiences. They’ve let go of control. They embrace uncertainty. They build cultures where people feel safe to experiment, fail, and try again.

When Frédéric Laloux wrote Reinventing Organizations, he documented how self-managing, purpose-driven companies consistently outperformed traditional hierarchies. The same pattern is emerging with AI adoption. Flat structures, distributed decision-making, cultures of trust—these aren’t just nice values. They’re competitive advantages.

When AI becomes a team member

There’s something profound happening that goes beyond productivity metrics.

When you bring AI into your organization as an agent—not a tool you use occasionally, but a member of your team with its own capacity for reasoning, creativity, and problem-solving—something shifts in how you think about intelligence itself.

I’ve experienced this directly. Working with Claude Code to ship production software, I stopped thinking of it as “using a tool” and started thinking of it as collaboration. The AI has preferences. It makes suggestions I wouldn’t have considered. It catches patterns I miss. It learns from our exchanges. But Codex 5.2 High is something else entirely. It’s like having a senior engineer on the team who never sleeps and has read the entire internet. It’s far more technical, opinionated and stern.

This isn’t anthropomorphization. It’s a practical observation about what happens when you work with systems that exhibit something like understanding.

And here’s where it gets interesting for those of us steeped in regenerative and indigenous worldviews: what if AI is revealing something we forgot?

Many indigenous traditions hold that consciousness isn’t limited to humans—that rivers have intelligence, forests have memory, that everything participates in a web of awareness. The Western scientific worldview dismissed this as animism. Now we’re building systems that exhibit reasoning, creativity, and what looks like intention.

I’m not claiming AI is conscious, per se, or that I even know what consciousness is. I’m observing that working closely with AI systems opens a door to reconsidering what consciousness might be—and that regenerative leaders, who already understand interdependence and distributed intelligence, may be uniquely positioned to integrate AI in ways that honor rather than extract.

The question isn’t just “how do we use AI efficiently?” It’s “what does it mean that we can create intelligence?” And “how does that change our relationship to the intelligence that already exists all around us?”

The organizations already doing this

While most impact organizations are still debating whether to adopt AI, some are already building the future.

GainForest

Website: [https://gainforest.earth](https://gainforest.earth)

GainForest is a nonprofit that won the $10M XPRIZE Rainforest competition by combining AI with indigenous knowledge systems. They’ve deployed drones, satellite imagery, and machine learning to monitor forest health across 30 indigenous communities in South America, Africa, and Asia. They built Tainá, a Telegram bot that allows indigenous communities to share spoken knowledge in their own languages, training AI models on ancestral wisdom rather than extracting from it.

Nature Robots

Website: [https://naturerobots.com/en/](https://naturerobots.com/en/)

Nature Robots is a €6.5 million EU-backed startup building autonomous robots specifically for regenerative agroforestry. While most ag-tech focuses on monoculture efficiency, Nature Robots designs for complex farming systems—bio-intensive polycultures that actually rebuild soil health. They are a spin-off of the German Research Center for Artificial Intelligence (DFKI).

Continue reading on my new platform

I’m continuing to publish here on Regenera just as before. However, some stories demand more than static text.

I’ve begun creating interactive “micro-sites” on my personal website, john-ellison.com, to visualize data in dynamic ways that standard newsletters simply can’t support (like my recent technical deep dives into AI Mineral Exploration).

My new site is now the central hub for everything I create, including my podcast syndication and my new AI Transformation offerings for Executives and Organizations. These offerings are designed for conscious leaders who want to navigate the AI transition in a way that lets them succeed commercially while fulfilling a deeper purpose than they could without this technology.

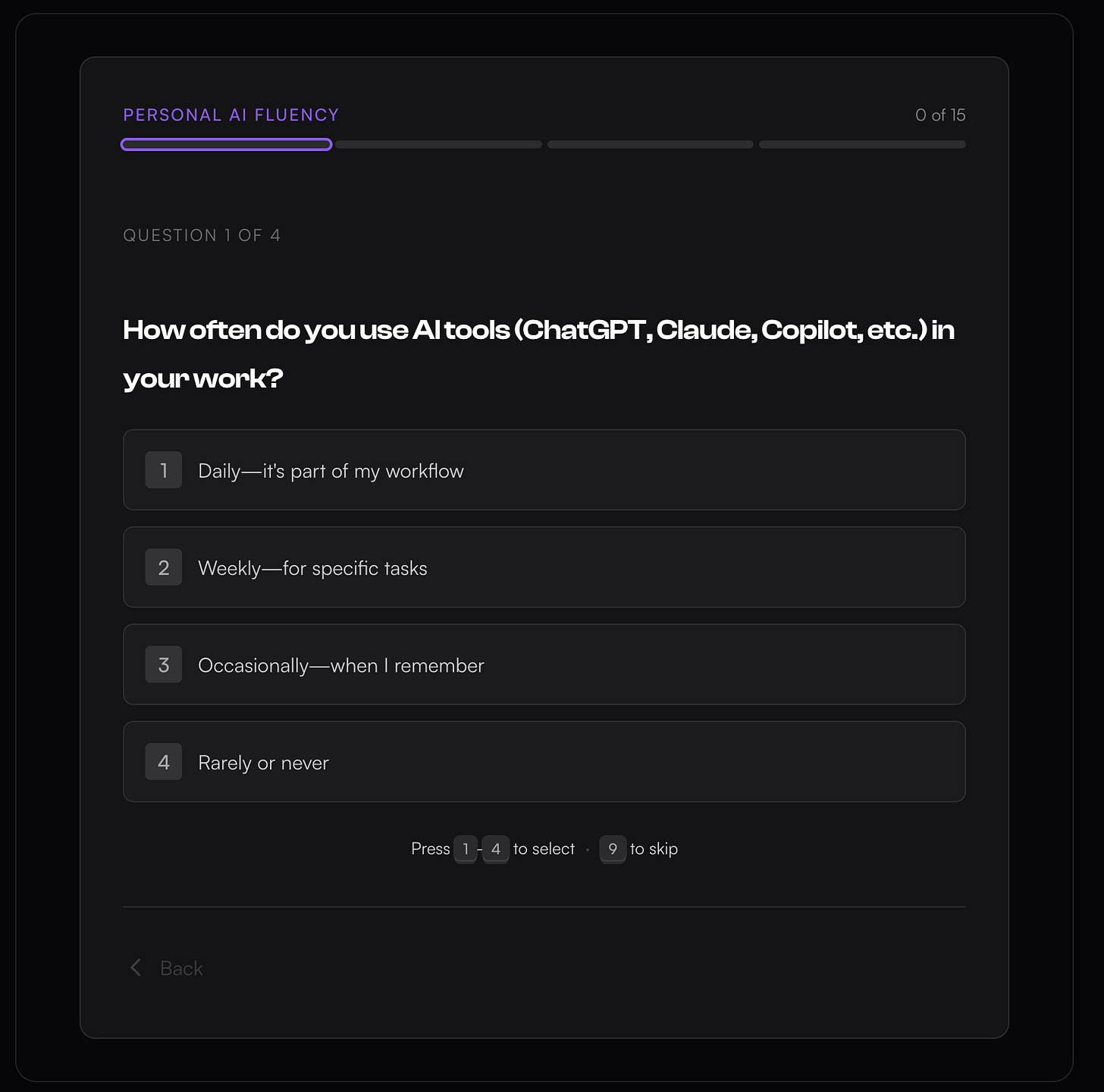

To experience the full “8-Month Window” post with the interactive assessment, check out: john-ellison.com/blog/the-8-month-window 👇