Building Fast, Staying Human: What Six Months of AI Development Taught Me About Real Impact

On navigating the tension between shipping code at AI speed and creating solutions that actually serve communities

What if the regenerative movement has been looking in all the wrong places for the right solutions to the challenges we face as a species?

I’ve been building AI-powered products for six months now. Claude Code. Cursor. Opencode. The whole stack. It’s given me superpowers I wish I’d had for my entire career.

But here’s what nobody talks about in all the “AI will 10x your productivity” discourse: speed isn’t impact. The faster I can build, the easier it becomes to build the wrong thing. At the same time, this technology makes building products far more accessible, by what feels to me like at least 100x.

This tension lives in my work every day. I’m teaching by doing, in service to communities—building tools for regenerative projects, climate initiatives, and collaborative platforms. The AI capabilities are incredible. But they’re also dangerous if we’re not intentional about what we’re accelerating toward.

The speed trap

Two years ago I stepped away from leadership at ReFi DAO to take a creative sabbatical, during which I began retraining on AI-assisted development. The technical learning curve was actually enjoyable.

When you can vibe-code a prototype in an afternoon, when Claude Code running Sonnet 4.5 can scaffold an entire feature set while you’re making coffee, the limiting factor stops being execution capacity. It becomes taste, judgment, and understanding what actually serves the people you’re building for.

The question I keep coming back to: Does this technology serve life?

What AI acceleration actually reveals

Here’s what became clear while building Saraven, my marketing platform for impact founders in the Agentic Era: AI doesn’t just speed up your existing workflow. It exposes every gap in your understanding.

AI can help you fail faster—which is good if you’re learning from those failures. But it can also help you build faster than you can think—which means creating tools that technically work but don’t actually serve anyone.

The “give-first” model as a constraint

My approach has always been teaching by doing, in service to others. This isn’t just philosophy—it’s a practical constraint that keeps me honest.

When I’m building for a specific community or project, when I know the people who will use what I create, when I’m embedded in the actual context of use—the speed becomes an asset. I can iterate quickly *because* I have tight feedback loops with real humans.

But when I’m just building in the abstract, when it’s a generic solution or a tool for “anyone who needs X”—that’s when AI acceleration becomes risky. You can build a lot of impressive technical infrastructure that serves no one in particular.

The distributed life and why it matters for AI builders

Something shifted over the past decade that we’re still processing. The institutions that used to provide meaning in developed nations, such as community groups and religious organizations, have weakened or dissolved for many people.

Now most people in the Western world look to their work for everything: income, meaning, community, purpose, stability. Add AI to this mix, and the pressure intensifies. Technology is supposed to give us more time, more freedom, more capacity. But for what?

I see this tension in the communities I work with. Climate projects need better tools, yes. But they also need spaces for people to actually be together, to build relationships, to find meaning in collective work. If my AI solution makes their work more efficient but leaves them feeling more isolated—what have I actually created?

The three-day workweek question

There’s talk about AI reducing work to three days a week. Maybe that’s coming, maybe not. But here’s the more interesting question: What will people do with that time?

If we build AI that just makes existing work faster without asking what work is *for*, we’re solving the wrong problem. The opportunity isn’t to work less. It’s to work differently—on things that require uniquely human capacities that AI can’t replicate.

Things like:

Building trust through presence

Making ethical decisions in gray areas

Understanding emotional nuance

Creating meaning through service

Mentoring and passing on embodied knowledge

This is where my “teaching by doing” approach matters. The AI can help me build tools, but the teaching part—the relationship building, the contextual understanding, the wisdom transfer—that’s irreplaceable.

What I’m learning about intentional acceleration

After six months of building AI-powered products, here’s what’s becoming clear:

The venting test: When something challenging happens, do people reach out to real humans or post to the void? If my tool provides a substitute for human connection rather than enabling it, I’m building the wrong thing.

The context-dependent approach: AI is incredible for some tasks and terrible for others. The skill isn’t in maximizing AI usage—it’s in knowing when to deploy it and when to prioritize human judgment.

The transparency requirement: Trust comes from honesty about limitations, not just capabilities. When I’m building for communities, they need to know what the AI can and cannot do, where human oversight matters, and how their agency is preserved.

Real examples from my work

Building the YouTube clipper integration for Saraven taught me this viscerally. I discovered oh-my-opencode, a plugin that orchestrates multiple AI agents in parallel. It was game-changing for development productivity: it cut my implementation from 400+ lines to 120 lines of clean code.

But the technical win was easy compared to the harder questions: Does automating content atomization serve creators, or does it just add to the noise? Does extracting “brand DNA” from domains help people find their authentic voice, or does it homogenize everything?

I don’t have perfect answers. But I know the questions matter more than the speed of execution.

Building AI solutions that enhance human capacity

The regenerative work I’ve been part of—through ReFi DAO, climate crypto communities, supporting Amazigh women’s ancestral knowledge projects—taught me something crucial: real impact comes from amplifying community agency, not replacing it with technical solutions.

This applies to AI development too. The question isn’t “what can AI do?” but “what uniquely human work becomes possible when AI handles the repetitive stuff?”

When I’m building tools for climate projects, I’m not trying to automate decision-making or replace community discussion. I’m trying to free up capacity for the conversations that matter—the relationship building, the trust formation, the collective sense-making that humans are uniquely good at.

AI as infrastructure, not replacement. That’s the frame that keeps me honest.

The tunnel we’re walking through

We’re in a weird transition moment. AI capabilities are accelerating faster than our social and organizational structures can adapt. Jobs are changing, skills are being devalued and revalued constantly, and nobody really knows what the next five years look like.

I don’t know how long we’ll be in this tunnel. But I know that if we focus on building AI that serves human connection rather than replacing it, we’ll come out the other side better than we went in.

This means:

Shipping code so fast that it gives us more time to connect more deeply with the people it serves

Building technical solutions while preserving human agency

Accelerating execution without rushing past wisdom

Teaching by doing, always in service to life

What’s next?

I’ve built a free online course teaching impact founders, regen leaders and indigenous land stewards how to build products with AI. It’s 14 days long and runs on a cohort basis. If you haven’t jumped into vibe coding yet, give it a try!

For those who want to go deeper, faster, I have an immersive program called Vibe Sprint Academy, where I personally teach you how to define a product and build it with AI, then walk you through launching and testing it with real users. It costs $999 and compresses 16 years of startup work, three acquisitions, training with Dr. BJ Fogg at Stanford, and learning Design Sprints from Jake Knapp at Google Ventures into one intensive program that you can do online, in your own time.

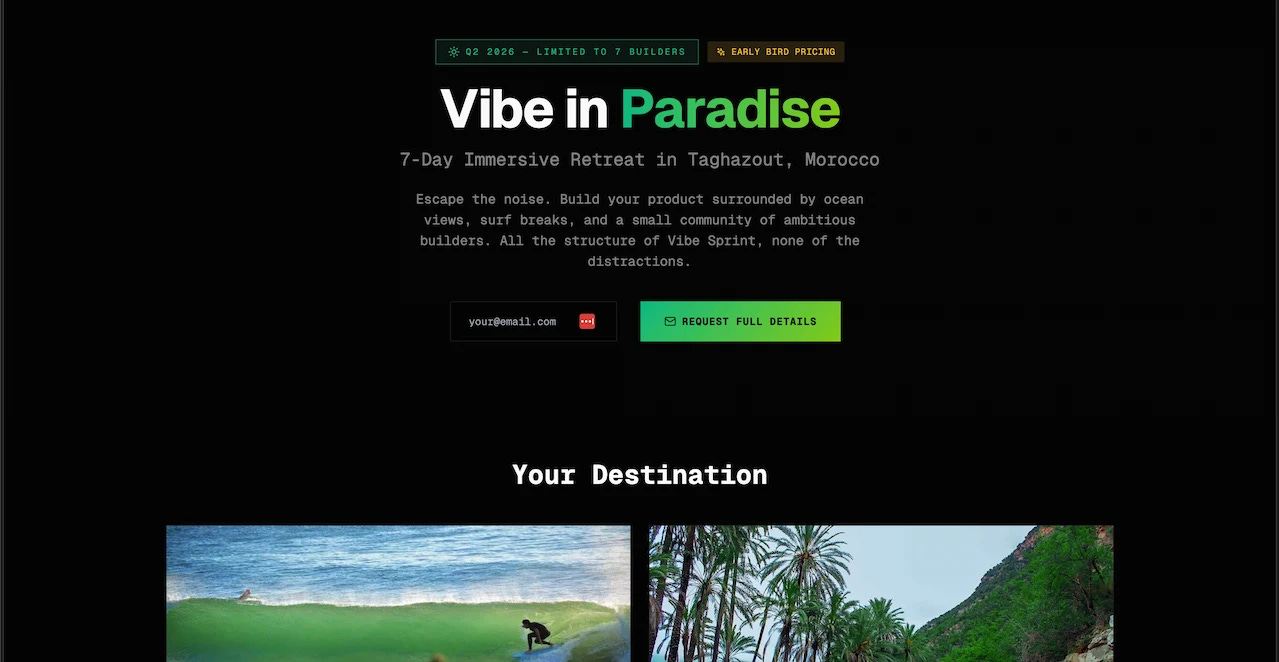

If you prefer the in-person vibe (I do), I’m launching Vibe in Paradise, a 7-day immersive retreat in Taghazout, Morocco, teaching PMs and builders to ship with Claude Code from a mountainside Berber villa overlooking the ocean.

We’ve designed it as a space to build your actual product while figuring out what’s worth building in the first place. Seven days, 6-7 amazing builders, daily surf and yoga, meals by a local Amazigh chef, and the room to think while you ship.

The real skill isn’t coding faster. It’s building things that matter.

If you’re navigating this same territory (vibe coding x impact), I’d love to hear what you’re up to!